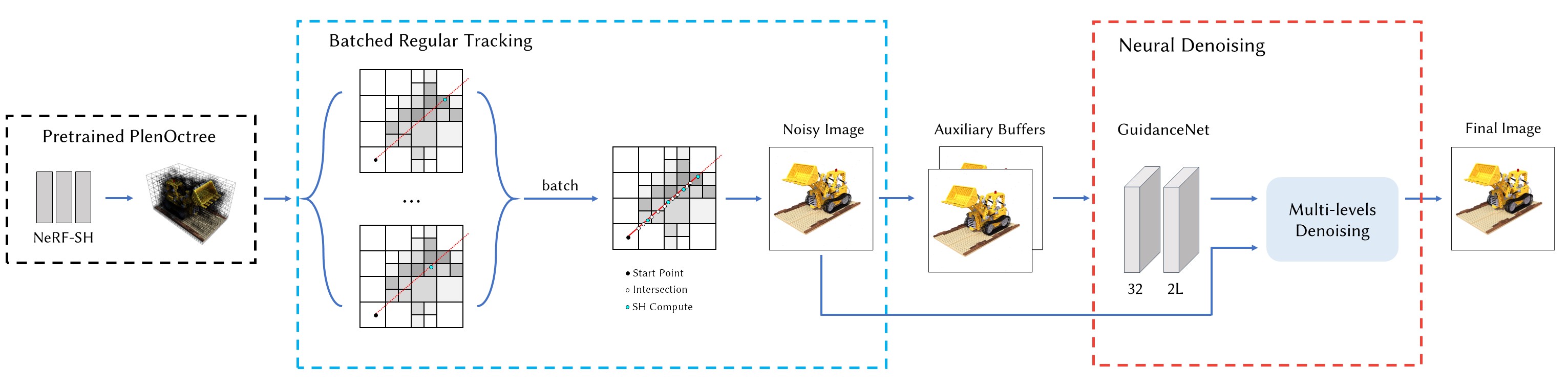

Neural Radiance Fields (NeRF) have demonstrated the ability to synthesize high-quality novel views. However, their slow inference speed motivates the search for faster rendering methods. In this paper, we propose RT-Octree, which builds on PlenOctree and combines batched regular tracking with neural denoising to achieve better real-time performance. We replace the volume rendering algorithm with regular tracking, and we further improve speed by batching all samples of each pixel into a single ray-voxel intersection pass. To reduce the variance caused by the small number of samples while preserving real-time speed, we propose a lightweight neural network, GuidanceNet, which predicts a guidance map and weight maps used by the subsequent multi-layer denoising module. We evaluate our method on both synthetic and real-world datasets, achieving over 100 frames per second (FPS) at a resolution of 1920×1080. Compared to PlenOctree, our method is 1.5 to 2 times faster at inference, and it is several orders of magnitude faster than NeRF. The experimental results demonstrate that our approach achieves real-time performance while maintaining comparable rendering quality.
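In Monte Carlo volume rendering, "regular tracking" usually means drawing an optical-depth threshold τ = -ln(1 - u) for each sample and walking the ray from voxel boundary to voxel boundary, accumulating the exact optical depth σΔ of each piecewise-constant voxel until it exceeds τ. The sample then terminates in that voxel. The Python sketch below illustrates this general technique together with one way to batch it: sorting the thresholds lets every sample of a pixel share a single walk over the ray-voxel segments. It is not the authors' implementation, and their batching and traversal may differ. The names `Segment`, `regular_tracking_batched`, and `alpha_composite` are hypothetical. The sketch also assumes that the ray-voxel intersections are already available as a list of segments and that each voxel has a constant RGB color (PlenOctree actually stores view-dependent spherical-harmonic color).

```python
import math
import random
from typing import List, Sequence, Tuple

RGB = Tuple[float, float, float]
# Hypothetical layout: one ray segment inside one voxel, (t_enter, t_exit, sigma, rgb).
# Assumes ray-voxel intersections were computed beforehand (e.g. by octree traversal).
Segment = Tuple[float, float, float, RGB]


def regular_tracking_batched(
    segments: Sequence[Segment],
    num_samples: int,
    rng: random.Random,
    background: RGB = (1.0, 1.0, 1.0),
) -> RGB:
    """Noisy Monte Carlo pixel estimate via regular tracking (illustrative sketch).

    Each sample draws an exponential optical-depth threshold and terminates in the
    voxel where the accumulated optical depth first exceeds it. Sorting the
    thresholds lets all samples share one pass over the segments.
    """
    taus = sorted(-math.log(1.0 - rng.random()) for _ in range(num_samples))
    acc: List[float] = [0.0, 0.0, 0.0]
    k = 0          # index of the next threshold not yet resolved
    depth = 0.0    # optical depth accumulated up to the current segment's entry
    for t0, t1, sigma, rgb in segments:
        depth_exit = depth + sigma * (t1 - t0)
        # All remaining thresholds below depth_exit terminate inside this voxel.
        # With constant per-voxel color, the exact hit distance is not needed.
        while k < num_samples and taus[k] < depth_exit:
            for c in range(3):
                acc[c] += rgb[c]
            k += 1
        depth = depth_exit
        if k == num_samples:
            break
    # Samples whose threshold exceeds the total optical depth escape to the background.
    escaped = num_samples - k
    return tuple((acc[c] + escaped * background[c]) / num_samples for c in range(3))


def alpha_composite(segments: Sequence[Segment], background: RGB = (1.0, 1.0, 1.0)) -> RGB:
    """Deterministic front-to-back compositing; the expected value of the estimator above."""
    transmittance = 1.0
    out = [0.0, 0.0, 0.0]
    for t0, t1, sigma, rgb in segments:
        alpha = 1.0 - math.exp(-sigma * (t1 - t0))
        for c in range(3):
            out[c] += transmittance * alpha * rgb[c]
        transmittance *= 1.0 - alpha
    return tuple(out[c] + transmittance * background[c] for c in range(3))


if __name__ == "__main__":
    segs = [
        (0.0, 0.5, 0.4, (1.0, 0.0, 0.0)),
        (0.5, 1.0, 2.0, (0.0, 1.0, 0.0)),
        (1.0, 1.5, 0.0, (0.0, 0.0, 1.0)),
    ]
    print("4 samples:", regular_tracking_batched(segs, 4, random.Random(0)))
    print("reference:", alpha_composite(segs))
```

With only a few samples per pixel the estimate is unbiased but noisy. That is the variance the abstract attributes to insufficient samples and that the denoising stage is meant to remove. The probability that a sample terminates in voxel i is exp(-depth_i) - exp(-depth_exit_i) = T_i (1 - exp(-σ_i Δ_i)), which matches the compositing weight in `alpha_composite`.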

```bibtex
@inproceedings{shu2023rtoctree,
  title={RT-Octree: Accelerate PlenOctree Rendering with Batched Regular Tracking and Neural Denoising for Real-time Neural Radiance Fields},
  author={Shu, Zixi and Yi, Ran and Meng, Yuqi and Wu, Yutong and Ma, Lizhuang},
  booktitle={SIGGRAPH Asia 2023 Conference Papers},
  pages={1--11},
  year={2023}
}
```